Mastering Docker and Virtualization in Your Self-Hosted Home Lab

Setting up a self-hosted home lab has become increasingly popular among tech enthusiasts and IT professionals. With Docker and virtualization technologies, you can efficiently replicate complex environments and enhance your development or testing workflows. This article delves into how you can leverage these technologies for optimal performance and flexibility in your personal lab setup.

Understanding Docker and Its Role in Modern Computing

Docker has become a pivotal component in modern computing, offering an innovative approach to application deployment and management. Originating from the need to address the inefficiencies of traditional virtual machines, Docker has evolved into a robust platform that leverages containerization to provide lightweight and isolated environments for applications. These containers encapsulate everything an application needs to run, including its code, libraries, and dependencies, allowing it to execute consistently across various environments.

At the heart of Docker’s operation lies the Docker Engine, the client-server runtime that creates and manages containers. Its core is the Docker daemon (`dockerd`), a background service responsible for building, running, and managing containers, which the `docker` command-line client talks to through an API. When it comes to sharing and distributing containerized applications, Docker Hub serves as a centralized registry where users can obtain pre-built images, facilitating swift and reliable deployment.

Unlike traditional virtual machines, which rely on a hypervisor and each boot a full guest operating system with its own allocated resources, containers run as isolated processes on a single shared operating system kernel. Because there is no guest OS to boot or keep in memory, containers typically start faster and use less memory than an equivalent VM. In practice, this lets you run many more containers than virtual machines on the same host, with the trade-off that kernel-level isolation is weaker than the isolation a hypervisor provides.
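One way to see the shared kernel in practice is to compare the kernel release reported on the host with the one reported inside a container. The sketch below assumes a Linux host with Docker installed and uses the public `alpine` image purely as a small example. On Docker Desktop for macOS or Windows, the container would instead report the kernel of Docker Desktop's Linux VM.

```shell
# Kernel release as seen by the host.
host_kernel="$(uname -r)"
echo "host kernel:      $host_kernel"

# Only attempt the container check when the docker CLI is present.
if command -v docker >/dev/null 2>&1; then
    # 'alpine' is an example image (assumption); any Linux image works.
    container_kernel="$(docker run --rm alpine uname -r)"
    echo "container kernel: $container_kernel"
fi
```

On a native Linux host both lines should show the same release, because the container has no kernel of its own.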

Developers and system administrators find Docker particularly appealing for its ability to streamline development workflows and ensure consistent application behavior across various stages—from development to production. By offering a separation of concerns, it empowers teams to focus on building and deploying applications with minimal friction.

In the context of a self-hosted home lab, Docker’s capabilities extend beyond individual application deployment. It lets you run complete application stacks as sets of cooperating containers, a microservices-style layout that makes individual services easy to replace and gives you hands-on practice with infrastructure management.

The Power of Self-Hosting

In the realm of digital infrastructure, **self-hosting** emerges as a beacon of empowerment and control. Unlike cloud-based solutions where external providers manage your data and services, self-hosting allows for unparalleled customization and ownership. This approach means hosting services on your own hardware, giving you the reins to tailor every aspect to fit your needs. With self-hosting, you’re not just a captive user of generic cloud offerings; you’re the architect of your digital ecosystem.

The autonomy gained by self-hosting is profound, especially in terms of **privacy**. In an era where data is increasingly scrutinized and commodified, self-hosting becomes a bulwark safeguarding your information. By maintaining control over the physical location of your servers and the software they run, you decide who can access sensitive data and under what conditions. Furthermore, without recurring cloud subscription fees eating into your budget, self-hosting can be a cost-effective alternative for long-term projects, provided you account for hardware, electricity, and the time spent managing scalability and availability yourself.

Practical examples of self-hosting demonstrate this power and flexibility. One can operate a personal web server, providing a platform for **web applications** and websites free from the dictates of shared hosting limitations. Beyond simple applications, entire **application stacks** can be deployed using Docker containers, encapsulating everything from databases to web services within isolated environments. This integration not only optimizes resource utilization but also enhances security.

For instance, hosting a Nextcloud instance in your **self-hosted** lab allows for personalized cloud storage, where data resides solely on hardware you control. Similarly, running a self-hosted media server like Plex can revolutionize how you manage and stream your digital content.

Ultimately, self-hosting bridges the gap between reliance on external services and total independence. Combining *Docker* and virtualization technologies in a home lab gives you the tools to explore that independence with confidence and control.

Introduction to Home Labs: Building Your Sandbox Environment

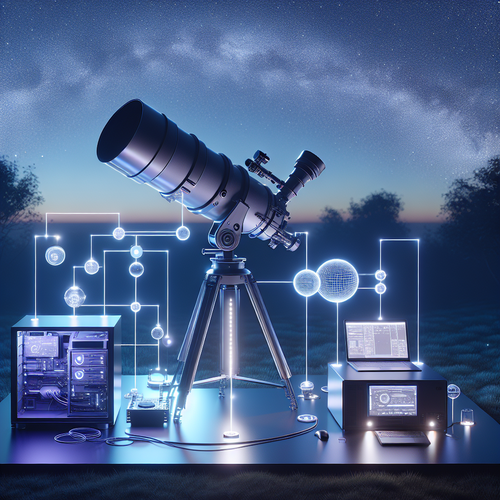

In the realm of digital exploration, home labs emerge as vital personal spaces crafted for experimentation, learning, and development. Within these sandbox environments, both novice enthusiasts and seasoned IT professionals can thrive, as home labs provide a playground for testing new technologies and applications. Essential to building a robust home lab is the careful selection of components like server hardware, networking infrastructure, and software architecture—all working in harmony to create a resilient system.

At the heart of the home lab lies the server hardware, often repurposed from surplus enterprise servers or custom-built with powerful processors, ample RAM, and substantial storage capacity. This foundation supports a myriad of virtualized environments and Docker containers, allowing users to deploy, scale, and test software applications under varied conditions.

Networking architecture in a home lab is another crucial layer, connecting components and enabling fluid communication and Internet connectivity. Networking devices like switches, routers, and firewalls can be optimized for efficiency and security, closely mimicking enterprise-level setups. Configuring subnetworks or VLANs introduces users to concepts of network segmentation and isolation, crucial for maintaining a secure and efficient lab environment.

In terms of software architecture, a home lab serves as a canvas for deploying cutting-edge solutions. Docker and virtualization technologies play pivotal roles here, facilitating rapid deployment and scalability of applications across multiple virtual machines and containers. These platforms empower enthusiasts to explore software development in a controlled setting, from initial creation to consistent refinement.

Home labs do more than just mimic enterprise environments; they are safe havens for experimentation and failures—valuable experiences that foster learning and innovation. As self-hosted platforms, they offer unprecedented control and privacy, allowing users to test and refine configurations without commercial constraints. The hands-on experience gained in a home lab is invaluable, making it a cornerstone for building robust skills in today’s tech-driven world.

Diving into Virtualization: Concepts and Practices

Virtualization has revolutionized the technological landscape, fundamentally altering how we approach computing, both in enterprise environments and personal home labs. Emerging in the 1960s with IBM’s pioneering efforts, virtualization initially sought to increase the utilization of expensive mainframes. Over the decades, virtualization evolved, influenced by distinct technological eras, from the introduction of VMware in the late 1990s to the rise of expansive cloud computing platforms today.

Virtualization manifests in several forms, each catering to unique operational needs. Hardware-assisted virtualization leverages CPU capabilities to efficiently host multiple operating systems, while containerization, embodied by Docker, isolates applications within a shared operating system kernel, enhancing efficiency. Desktop virtualization empowers users to run separate operating systems on a single physical machine, sparking innovation in cross-platform development.
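Before planning hypervisor workloads, it can help to check whether the CPU advertises hardware virtualization extensions. This is a minimal sketch that assumes a Linux host exposing `/proc/cpuinfo`; the `vmx` flag indicates Intel VT-x and `svm` indicates AMD-V.

```shell
# Count CPU flag lines that advertise Intel VT-x (vmx) or AMD-V (svm).
if [ -r /proc/cpuinfo ]; then
    # grep -c prints 0 and exits non-zero when nothing matches, hence '|| true'.
    virt_flags="$(grep -Ec '(vmx|svm)' /proc/cpuinfo || true)"
else
    virt_flags=0   # not Linux, or /proc is unavailable
fi

if [ "$virt_flags" -gt 0 ]; then
    echo "Hardware virtualization extensions detected"
else
    echo "No vmx/svm flags visible (possibly disabled in firmware or hidden inside a VM)"
fi
```

Containers do not need these extensions, since they share the host kernel, but hypervisors generally rely on them for acceptable performance.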

The advantages afforded by virtualization are extensive. Resource optimization is perhaps the most compelling benefit, allowing multiple instances to run on a single hardware system without interference, maximizing computing power and cost-effectiveness. Scalability is another key aspect, where dynamic resource allocation can scale applications up or down based on demand, ideal for home labs constantly evolving with technological advances. Isolation, a hallmark of virtualization, offers a safety net against system failures or security breaches, by sequestering applications and resources into separate sandboxes. This enhances reliability and security, critical in a home lab environment where experimentation is rife.

Integrating virtualization into a home lab setup amplifies its utility and learning potential. It allows enthusiasts and professionals alike to simulate complex, real-world scenarios with minimal hardware, encouraging a hands-on approach to mastering modern technologies. The seamless fusion of Docker and advanced virtualization principles forms the backbone of a well-rounded, self-hosted home lab, setting the stage for substantial innovation and discovery.

Integrating Docker into Your Home Lab

Integrating Docker into your home lab is a transformative step towards achieving a highly efficient and modular environment. To begin, **installing Docker** is the foundational step, and it varies slightly across operating systems. For **Linux distributions**, you can leverage package managers; for example, on Ubuntu, `sudo apt-get install docker.io` installs the distribution’s Docker package (Docker’s own apt repository offers `docker-ce` as an alternative). On **Windows**, Docker Desktop is popular due to its user-friendly approach, offering a guided installation wizard. For **macOS** users, Docker Desktop is also a common choice, providing a GUI alongside command-line controls.
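As a rough illustration of the Linux route, the following sketch installs Docker from Ubuntu's own repositories. It assumes an Ubuntu host with systemd and a user with sudo rights, and the function name `install_docker_ubuntu` is made up for this example. The commands are wrapped in a function so that nothing is installed until you call it.

```shell
# Sketch: install Docker Engine from Ubuntu's own repositories.
# Assumes an Ubuntu host with systemd and a user with sudo rights.
install_docker_ubuntu() {
    sudo apt-get update || return 1
    sudo apt-get install -y docker.io || return 1
    # Start the daemon now and on every boot.
    sudo systemctl enable --now docker || return 1
    # Smoke test: pulls and runs Docker's small hello-world image.
    sudo docker run --rm hello-world
}

# Nothing is installed until the function is called explicitly:
# install_docker_ubuntu
```

If you prefer the packages published by Docker itself, follow Docker's installation guide for your distribution instead of this sketch.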

Once installed, effective management of Docker containers is crucial. Embrace Docker’s principles by keeping containers lightweight and ephemeral, with persistent data stored in volumes rather than inside the container. Prefer official Docker images or other trusted sources, and update them frequently to pick up security fixes. Use `docker ps` to list running containers and `docker exec` to run commands inside a running container.
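The day-to-day commands mentioned above might look like the following. The container name `web` is a placeholder for whatever you have running, and the block does nothing on machines without the `docker` CLI.

```shell
# Hypothetical container name, used only for illustration.
CONTAINER="web"

if command -v docker >/dev/null 2>&1; then
    # List running containers in a compact, custom format.
    docker ps --format '{{.Names}}\t{{.Image}}\t{{.Status}}'

    # Include stopped containers as well.
    docker ps --all

    # Run a one-off command inside a running container.
    docker exec "$CONTAINER" cat /etc/os-release

    # For an interactive shell (needs a terminal):
    #   docker exec -it "$CONTAINER" sh
fi
```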

**Docker Compose** elevates container management by allowing the deployment of multi-container applications with a single command. Creating a `docker-compose.yml` file lets you define services, networks, and volumes in a declarative format. This simplification fosters reproducibility and rapid scaling in your home lab, enabling you to experiment with complex stacks effortlessly.
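To make this concrete, here is a minimal sketch that writes a two-service `docker-compose.yml` and starts it. The directory name `compose-demo`, the service names, the host port, and the choice of `nginx` and `redis` images are all assumptions for illustration, and the `docker compose` subcommand assumes Compose v2 is installed.

```shell
# Hypothetical project directory; override by exporting LAB_DIR.
LAB_DIR="${LAB_DIR:-./compose-demo}"
mkdir -p "$LAB_DIR"

# Write a two-service stack: a web server and a key-value store.
cat > "$LAB_DIR/docker-compose.yml" <<'EOF'
services:
  web:
    image: nginx:stable
    ports:
      - "8080:80"          # host port 8080 -> container port 80
    networks:
      - labnet
    restart: unless-stopped
  cache:
    image: redis:7
    volumes:
      - cache-data:/data   # named volume keeps data across restarts
    networks:
      - labnet
    restart: unless-stopped

networks:
  labnet:

volumes:
  cache-data:
EOF

# Start the stack in the background when the docker CLI is available.
if command -v docker >/dev/null 2>&1; then
    docker compose -f "$LAB_DIR/docker-compose.yml" up -d
fi
```

Running `docker compose -f "$LAB_DIR/docker-compose.yml" down` stops and removes the containers again, while the named volume is kept unless you add `--volumes`.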

For those seeking enhanced scalability and application management, setting up **container orchestration** systems such as Docker Swarm or Kubernetes is a pivotal step. Docker Swarm is inherently integrated with Docker, offering straightforward orchestration via familiar Docker CLI commands. Conversely, Kubernetes provides more intricate control and scalability, albeit with a steeper learning curve. When orchestrating with Kubernetes, tools like `kubectl` are indispensable for managing components across clusters, enabling an environment that can seamlessly scale based on demand.
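The difference in feel between the two orchestrators can be sketched with a few commands. The service name `web`, the image, the port, and the replica counts are illustrative assumptions; the Kubernetes half additionally assumes `kubectl` is already configured against a cluster.

```shell
# Hypothetical service name and replica count.
SERVICE="web"
REPLICAS=3

if command -v docker >/dev/null 2>&1; then
    # Turn this host into a single-node swarm manager.
    docker swarm init

    # Run several replicas of an example web service.
    docker service create --name "$SERVICE" --replicas "$REPLICAS" \
        --publish 8080:80 nginx:stable

    # Scale the service up and list what is running.
    docker service scale "$SERVICE=5"
    docker service ls
fi

if command -v kubectl >/dev/null 2>&1; then
    # Rough Kubernetes equivalent of the steps above.
    kubectl create deployment "$SERVICE" --image=nginx:stable --replicas="$REPLICAS"
    kubectl scale deployment "$SERVICE" --replicas=5
    kubectl get pods
fi
```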

Integrating Docker into your home lab not only optimizes resource usage but also fosters an adaptable playground for experimenting with various applications and services, setting the stage for a dynamic home lab ecosystem that aligns with cutting-edge industry practices.

Virtualization Tools: Hypervisors and Beyond

In a self-hosted home lab, virtualization technology is key to optimizing resource use and achieving a dynamic and flexible computing environment. Hypervisors facilitate this process by allowing multiple virtual machines (VMs) to run on a single physical machine, each with its own operating system and applications. Popular hypervisor solutions such as VMware, Hyper-V, and Proxmox offer robust features catering to various needs.

VMware, known for its mature and reliable solutions, provides a comprehensive ecosystem for enterprise-grade virtualization. Its ESXi hypervisor, the core of the vSphere suite, is an option for home labs focusing on scalability and performance. Hyper-V, Microsoft’s hypervisor, is built into Windows Server and several desktop editions of Windows such as Pro and Enterprise, appealing to those who prefer a unified system interface.

On the open-source front, Proxmox VE is gaining traction due to its flexibility and no-cost entry point, with features like integrated backup and clustering. In a home lab context, these hypervisors let you isolate environments, test different operating systems, and run various applications without dedicating a physical machine to each.

Diving into more advanced features, configuring virtual networks is crucial. This process involves setting up VLANs or virtual switches to isolate traffic, enhancing security and efficiency. You can also allocate resources like CPU and RAM using resource pools, ensuring critical applications maintain performance while others share leftover capacity.
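On Proxmox VE, for example, VLAN tagging and basic CPU and memory sizing can be set per VM with the `qm` tool. This sketch uses made-up values (VM ID 100, bridge `vmbr0`, VLAN 20) and assumes the bridge is already set up to carry that VLAN; other hypervisors expose the same ideas through their own tooling.

```shell
# Hypothetical values: VM ID 100, bridge vmbr0, VLAN 20.
VMID=100
BRIDGE="vmbr0"
VLAN_TAG=20

# 'qm' is the Proxmox VE command-line tool; skip everything on other systems.
if command -v qm >/dev/null 2>&1; then
    # Attach the VM's first NIC to the bridge and tag its traffic with the VLAN ID.
    qm set "$VMID" --net0 "virtio,bridge=${BRIDGE},tag=${VLAN_TAG}"

    # Give the VM 2 cores and 4096 MiB of RAM.
    qm set "$VMID" --cores 2 --memory 4096

    # Show the resulting configuration.
    qm config "$VMID"
fi
```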

Storage solutions within virtualization can range from simple local storage to networked options like iSCSI or NFS shares. Network-attached storage (NAS) devices can serve as centralized repositories, accessible by all VMs for heightened data accessibility and redundancy.

Careful resource allocation improves efficiency in a home lab. Hypervisors typically offer dynamic resource allocation, such as memory ballooning and CPU shares, to redistribute computing power based on workload needs. This flexibility also makes it practical to run Docker containers inside VMs, combining the container practices described earlier with VM-level isolation. By implementing these technologies thoughtfully, your home lab becomes more versatile and robust, poised to handle ever-evolving computing challenges.

Maximizing Performance and Efficiency in Your Home Lab

Maximizing performance and efficiency in your self-hosted home lab requires a comprehensive understanding of resource management for your Docker containers and virtual machines (VMs). When you’re juggling multiple workloads, **resource allocation** becomes crucial. Allocate CPU and RAM wisely among containers and VMs, ensuring enough resources for each to perform optimally without starving others. For Docker, setting resource limits using flags like `--cpus` and `--memory` is essential. For VMs, leveraging dynamic resource allocation can ensure that heavier workloads receive the resources they need without constant manual intervention.
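A container started with both limits could look like this. The container name `limited-web`, the image, and the specific limit values are placeholders chosen for the example.

```shell
# Hypothetical limits for one container.
CPU_LIMIT="1.5"     # at most one and a half CPU cores
MEM_LIMIT="512m"    # hard memory cap of 512 MiB

if command -v docker >/dev/null 2>&1; then
    # Start an example container with both limits applied.
    docker run -d --name limited-web \
        --cpus "$CPU_LIMIT" \
        --memory "$MEM_LIMIT" \
        nginx:stable

    # One-shot snapshot of actual CPU and memory usage per container.
    docker stats --no-stream
fi
```

Comparing the `docker stats` output against the limits over time is a simple way to decide whether a cap is too tight or too generous.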

**Monitoring tools** are indispensable in maintaining a healthy home lab. Tools like Prometheus and Grafana for Docker and VMware’s vSphere for VMs provide real-time insights into resource usage, allowing you to diagnose bottlenecks before they escalate. Custom alerts and dashboards can track CPU, memory, and network usage, ensuring your lab maintains low latency and high throughput.
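A small Prometheus and Grafana stack can itself be run in containers. The sketch below is a starting point under several assumptions: the directory name `monitoring-demo` is invented, the images are the publicly available `prom/prometheus` and `grafana/grafana`, and Prometheus only scrapes itself. Per-container metrics would need an additional exporter such as cAdvisor, which is not shown here.

```shell
# Hypothetical directory for the monitoring stack.
MON_DIR="${MON_DIR:-./monitoring-demo}"
mkdir -p "$MON_DIR"

# Minimal Prometheus config: scrape Prometheus itself every 15 seconds.
cat > "$MON_DIR/prometheus.yml" <<'EOF'
global:
  scrape_interval: 15s
scrape_configs:
  - job_name: prometheus
    static_configs:
      - targets: ["localhost:9090"]
EOF

# Compose file that runs Prometheus and Grafana side by side.
cat > "$MON_DIR/docker-compose.yml" <<'EOF'
services:
  prometheus:
    image: prom/prometheus
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml:ro
    ports:
      - "9090:9090"
  grafana:
    image: grafana/grafana
    ports:
      - "3000:3000"
EOF

if command -v docker >/dev/null 2>&1; then
    docker compose -f "$MON_DIR/docker-compose.yml" up -d
fi
```

Once both containers are up, Prometheus can be added as a data source in Grafana and dashboards built on top of it.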

**Efficient storage solutions** are imperative. Using lightweight containers and optimizing VM storage can reduce latency. Consider the benefits of SSDs over HDDs for hosting high-demand applications. Filesystems like ZFS can offer robust data integrity and reduce disk IO overhead through advanced caching mechanisms.

The value of regular **backup solutions** cannot be overstated. VMs can be backed up using snapshots and exports, while for Docker the data that matters usually lives in volumes and bind mounts, so back those up alongside your images and Compose files. Automating these backups can significantly reduce recovery time during failures. RAID configurations add redundancy against disk failure, but they complement backups rather than replace them.
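One common pattern for backing up a Docker volume is to mount it read-only into a throwaway container and archive its contents. In this sketch the volume name `lab-data` and the `./backups` directory are hypothetical, and `alpine` is used only because it is small and ships a `tar` command.

```shell
# Hypothetical names: a named volume and a local backup directory.
VOLUME="lab-data"
BACKUP_DIR="${BACKUP_DIR:-./backups}"
STAMP="$(date +%Y%m%d-%H%M%S)"
ARCHIVE="${VOLUME}-${STAMP}.tar.gz"

mkdir -p "$BACKUP_DIR"

if command -v docker >/dev/null 2>&1; then
    # Mount the volume read-only plus the backup directory, then archive it.
    # Bind mounts need an absolute host path, hence the 'cd ... && pwd'.
    docker run --rm \
        -v "${VOLUME}:/data:ro" \
        -v "$(cd "$BACKUP_DIR" && pwd):/backup" \
        alpine tar czf "/backup/${ARCHIVE}" -C /data .
fi
```

A cron entry or systemd timer could run a script like this on a schedule, ideally followed by copying the archive to a second machine or disk.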

Ongoing **maintenance practices** are vital for ensuring sustainability. Regularly update your Docker images and VM templates to incorporate security patches and performance enhancements. Periodic audits of system resources and network configurations can preemptively address potential issues.
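For stacks managed with Docker Compose, a routine image update might be scripted as follows. The Compose file path is a placeholder, and the block is skipped when either Docker or the file is missing.

```shell
# Hypothetical path to a Compose file managed in the lab.
COMPOSE_FILE="${COMPOSE_FILE:-./compose-demo/docker-compose.yml}"

if command -v docker >/dev/null 2>&1 && [ -f "$COMPOSE_FILE" ]; then
    # Fetch newer versions of the images referenced by the stack.
    docker compose -f "$COMPOSE_FILE" pull

    # Recreate only the containers whose image or configuration changed.
    docker compose -f "$COMPOSE_FILE" up -d

    # Remove dangling images left behind by the update.
    docker image prune -f
fi
```

Pinning image tags to specific versions in the Compose file, rather than floating tags, makes such updates a deliberate step you can roll back.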

Embracing these practices not only solidifies the foundation of your home lab but also positions you to adapt to future trends in Docker and virtualization. Being proactive in optimization and maintenance goes a long way toward a resilient and efficient home lab environment, ready for innovations like edge computing, IoT, and AI that lie ahead.

Future Trends: The Role of Docker and Virtualization in Emerging Technologies

The convergence of Docker and virtualization with emerging technologies like edge computing, the Internet of Things (IoT), and Artificial Intelligence (AI) marks a pivotal transformation in the self-hosted home lab landscape. As these technologies continue to evolve, integrating them with a home lab setup promises increased agility, efficiency, and a host of opportunities for both innovation and advancement.

**Edge computing** necessitates a decentralized approach to processing data closer to the source. By incorporating Docker containers and virtual machines into your home lab, you lay the groundwork for developing and testing applications that can efficiently handle distributed workloads. This not only optimizes latency and bandwidth but also ensures that your home lab remains a viable testbed for edge computing solutions. Given the growing demand for real-time data processing, experimenting with edge integrations in a home lab setting can significantly expand your capabilities.

In the realm of **IoT**, Docker and virtualization enable swift deployment of varied IoT applications, often requiring different configuration settings and dependencies. A self-hosted home lab offers the perfect environment for prototyping and scaling IoT solutions. As more devices become connected, it’s crucial to understand how to manage, orchestrate, and secure these devices effectively, potentially leveraging Docker’s lightweight containerization for streamlined application delivery.

Similarly, **AI** technologies are poised to transform how home labs function. Running AI workloads demands substantial computational power and careful resource management, areas where Docker and virtualization help. Containers provide a reproducible deployment environment, so AI applications can be moved between machines and scaled out with less friction, although giving containers access to GPUs requires extra host configuration. Staying informed about advancements in AI infrastructure can bolster your lab’s capabilities, turning it into a capable platform for machine learning and AI experiments.

Keeping pace with these innovations not only augments the personal growth potential of your home lab but also enhances professional development. As Docker and virtualization intersect with emergent technologies, embracing these trends ensures your home lab remains at the cutting edge, providing invaluable insights and hands-on experience with the technologies shaping our future.

Conclusions

Docker and virtualization technologies present unparalleled opportunities to enrich self-hosted home labs. They offer isolated environments for development, testing, and experimentation. By mastering these tools, you can create scalable and cost-effective solutions that enhance your technical proficiency. Stay informed of trends to keep your home lab aligned with future technological advancements.
